TinyML is a fast-growing trend within the rapidly evolving field of artificial intelligence (AI). It deploys machine learning models on power-efficient embedded systems, bridging cloud-based AI solutions and the growing demand for distributed computing at the edge. As semiconductor service providers and embedded product designers continue to innovate, TinyML is positioned to reshape many industries by bringing intelligent capabilities to very small devices.

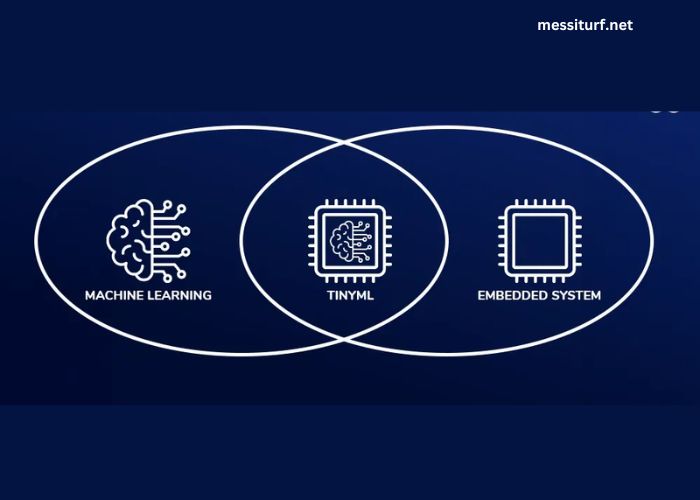

Understanding TinyML: The Convergence of ML and Embedded Systems

TinyML brings together machine learning and embedded systems, running AI algorithms on basic microcontrollers with very limited computing resources. TinyML models are designed for these constrained environments: they typically fit in memory measured in kilobytes and run on power budgets in the milliwatt range or lower.
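To make the kilobyte scale concrete, the sketch below estimates how much storage a model's weights need at a given bit width and checks the result against a memory budget. The 100,000-parameter model and the 256 KB budget are hypothetical round numbers, not figures for any particular device, and the estimate counts weights only (no activations, runtime code, or metadata).

```python
def weight_footprint_kb(num_params: int, bits_per_param: int) -> float:
    """Storage needed for a model's weights, in kilobytes (1 KB = 1024 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1024


def fits_in_budget(num_params: int, bits_per_param: int, budget_kb: float) -> bool:
    """Check whether the weights alone fit in the given memory budget."""
    return weight_footprint_kb(num_params, bits_per_param) <= budget_kb


# Hypothetical example: a 100,000-parameter model and a 256 KB memory budget.
NUM_PARAMS = 100_000
BUDGET_KB = 256

for bits in (32, 8):
    size = weight_footprint_kb(NUM_PARAMS, bits)
    print(f"{bits}-bit weights: {size:.1f} KB, fits: {fits_in_budget(NUM_PARAMS, bits, BUDGET_KB)}")
```

With these numbers, the 32-bit weights (about 390 KB) exceed the budget, while the same weights stored as 8-bit integers (about 98 KB) fit comfortably.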

TinyML's core innovations are model compression, efficient algorithms, and specialized hardware built by semiconductor service providers. Together, these advances shrink complex neural networks enough to run on microcontrollers while keeping accuracy acceptable for targeted tasks.
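One widely used compression technique is magnitude pruning: the weights with the smallest absolute values are set to zero, on the assumption that they contribute least to the output. The sketch below prunes a flat Python list to a target sparsity; the function name is hypothetical, and real toolchains usually prune layer by layer and fine-tune afterward to recover accuracy, which is omitted here.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights.

    Exactly round(sparsity * len(weights)) entries are zeroed; ties in
    magnitude are broken by index order (earlier indices are pruned first).
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = round(sparsity * len(weights))
    # Indices sorted from smallest to largest absolute value (stable sort).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.4]
print(magnitude_prune(weights, 0.5))
```

Zeroed weights only save memory when the model is stored in a sparse format or when whole channels are removed (structured pruning); the list returned here keeps its original length.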

The Technical Foundation: How TinyML Works

TinyML follows a pipeline that starts with conventional model development and ends with deployment on resource-constrained devices. Developers first train a full-sized model with a standard machine learning framework. Quantization then converts the model's parameters from 32-bit floating-point numbers to compact integers (typically 8-bit), which cuts the memory needed for the weights roughly fourfold.
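A minimal sketch of the float-to-integer step, assuming a simple asymmetric (affine) per-tensor int8 scheme in which each real value is approximated as `scale * (q - zero_point)`. The function names are illustrative and are not part of any framework's API.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float, int]:
    """Asymmetric (affine) per-tensor quantization of floats to int8.

    Returns (q_values, scale, zero_point) such that
    value ≈ scale * (q - zero_point).
    """
    qmin, qmax = -128, 127
    # Include 0.0 in the range so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) if hi > lo else 1.0
    zero_point = int(round(qmin - lo / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    # Round to the nearest step, shift by the zero point, and saturate to int8.
    q = [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q: list[int], scale: float, zero_point: int) -> list[float]:
    """Map int8 values back to approximate floats."""
    return [scale * (x - zero_point) for x in q]


weights = [-1.0, -0.5, 0.0, 0.25, 0.5, 1.0]
q, scale, zp = quantize_int8(weights)
print(q, scale, zp)
print(dequantize_int8(q, scale, zp))
```

Each reconstructed value lands within half a quantization step (`scale / 2`) of the original. Production frameworks refine this; TensorFlow Lite, for example, quantizes weights per channel with a zero point of 0 and calibrates activation ranges on representative data.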

Product design services add value at this stage by building hardware accelerators and software frameworks that execute compressed models efficiently. Experienced embedded design firms must also tune the overall system architecture to meet both performance targets and energy constraints.
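Much of what these accelerators and inference kernels optimize is integer-only arithmetic. The hypothetical function below computes one output of a quantized fully connected layer: int8 inputs and weights are multiplied and accumulated in a wide integer, then rescaled into the int8 output range. It assumes symmetric weights (zero point 0) and a bias pre-quantized with scale `x_scale * w_scale`, a convention common in int8 schemes; real kernels replace the floating-point rescale with a fixed-point multiply and shift.

```python
def int8_dot_requant(
    x_q: list[int], x_zp: int, x_scale: float,
    w_q: list[int], w_scale: float,
    bias_q: int,
    out_scale: float, out_zp: int,
) -> int:
    """Compute one quantized output neuron using integer multiply-accumulate."""
    acc = bias_q  # wide accumulator (int32 on real hardware)
    for xq, wq in zip(x_q, w_q):
        acc += (xq - x_zp) * wq  # weights are symmetric, so no weight zero point
    # Rescale the accumulator into the output's quantized domain.
    # Simplification: a float multiplier stands in for fixed-point arithmetic.
    multiplier = (x_scale * w_scale) / out_scale
    out = int(round(acc * multiplier)) + out_zp
    return max(-128, min(127, out))  # saturate to the int8 range


# Real values: x = [1, 2, -1], w = [1, -1, 2], bias = 1.0, so the float result is -2.0.
print(int8_dot_requant([2, 4, -2], 0, 0.5, [4, -4, 8], 0.25, 8, 0.25, 5))
```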

The TinyML workflow typically involves:

- Training a model with a standard ML framework.

- Compressing the model with techniques such as quantization and pruning.

- Converting the model into a format that embedded systems can run.

- Deploying the model to microcontrollers with a specialized inference framework.

- Monitoring performance in the field and delivering model updates wirelessly as over-the-air (OTA) updates.
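For the conversion and deployment steps, one common approach with frameworks such as TensorFlow Lite for Microcontrollers is to compile the serialized model file into the firmware as a C byte array, often generated with a tool like `xxd -i`. The sketch below produces similar C source in Python; the function name, the array name `g_model`, and the dummy bytes are placeholders, and any alignment directives a given toolchain requires are omitted.

```python
def bytes_to_c_array(data: bytes, name: str, per_line: int = 12) -> str:
    """Render raw bytes as C source defining a const unsigned char array.

    The layout loosely follows `xxd -i` output, so the model can be
    compiled directly into microcontroller firmware.
    """
    lines = [f"const unsigned char {name}[] = {{"]
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const unsigned int {name}_len = {len(data)};")
    return "\n".join(lines)


# Hypothetical usage: in practice `data` would come from reading a model file,
# e.g. open("model.tflite", "rb").read().
print(bytes_to_c_array(b"\x00\x01\x02\x03\xfe\xff", "g_model"))
```

A trailing comma after the last element is valid in a C initializer list, which keeps the generator simple.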

Applications Driving TinyML Adoption

Running AI models locally on low-power devices enables applications that were previously impractical. TinyML-enabled wearables can monitor vital signs continuously over long periods because they do not depend on a cloud connection. This improves healthcare delivery and protects patient privacy, since sensitive health data can stay on the device.

In agriculture, a single battery-powered TinyML sensor unit can handle soil analysis, crop observation, and pest detection for long periods without a battery change. Embedded product design services work with agricultural experts to build smart farming systems that raise yields while reducing resource consumption.
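Battery life on such a sensor depends mostly on how much of its time it spends asleep. The back-of-the-envelope duty-cycle estimate below illustrates this; the function name, currents, capacity, and wake schedule are hypothetical round numbers, and the model ignores self-discharge, temperature effects, and regulator losses.

```python
def battery_life_days(capacity_mah: float, active_ma: float, sleep_ma: float,
                      active_s: float, period_s: float) -> float:
    """Estimate battery life for a device awake `active_s` seconds out of every `period_s` seconds."""
    duty = active_s / period_s
    avg_ma = duty * active_ma + (1 - duty) * sleep_ma  # time-weighted average current
    return capacity_mah / avg_ma / 24  # mAh / mA = hours; divide by 24 for days


# Hypothetical sensor: 1000 mAh battery, 10 mA while sampling and running inference,
# 0.01 mA asleep, awake for 1 s out of every 60 s.
days = battery_life_days(1000, 10, 0.01, 1, 60)
print(f"{days:.0f} days")
```

Shortening the active window, for example by running a smaller quantized model, reduces the average current almost proportionally, because the active current dominates.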

Industrial applications are equally transformative. TinyML-based predictive maintenance sensors flag early signs of equipment failure so that operators can intervene before an unplanned shutdown. Co-development with semiconductor service providers lets these systems operate in harsh environments where network connectivity is unreliable, bringing AI capabilities to sites that were previously underserved.
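A deliberately simple stand-in for the on-device model illustrates the idea: learn a machine's normal vibration level from baseline recordings, then flag windows whose root-mean-square (RMS) amplitude deviates strongly from it. Deployed systems typically use a trained model (for example, an autoencoder or classifier) instead of this z-score rule; the class name, window contents, and threshold of 3.0 below are assumptions for illustration.

```python
import math
import statistics


def window_rms(samples: list[float]) -> float:
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class VibrationMonitor:
    """Flags vibration windows whose RMS is far from a learned baseline (z-score rule)."""

    def __init__(self, baseline_windows: list[list[float]], threshold: float = 3.0):
        rms_values = [window_rms(w) for w in baseline_windows]
        self.mean = statistics.fmean(rms_values)
        # Guard against zero spread when the baseline is perfectly constant.
        self.std = statistics.pstdev(rms_values) or 1e-9
        self.threshold = threshold

    def is_anomalous(self, window: list[float]) -> bool:
        """True if the window's RMS lies more than `threshold` standard deviations from the baseline mean."""
        z = abs(window_rms(window) - self.mean) / self.std
        return z > self.threshold


monitor = VibrationMonitor([[1.0, -1.0, 1.0, -1.0], [1.1, -1.1, 1.1, -1.1], [0.9, -0.9, 0.9, -0.9]])
print(monitor.is_anomalous([1.05, -1.05, 1.05, -1.05]))
print(monitor.is_anomalous([3.0, -3.0, 3.0, -3.0]))
```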

Challenges in TinyML Development

Despite its potential, TinyML development faces specific difficulties. The main one is the tension between model complexity and tight resource limits. Building TinyML systems also requires expertise in both microcontroller architecture and machine learning, a combination that few professionals have.

Semiconductor service providers must design processors that deliver enough AI performance at extremely low power. In response, the industry has developed specialized neural processing units (NPUs) and other accelerators aimed at TinyML applications.

Other challenges include:

- Balancing AI model performance against the available power and memory.

- Maintaining stable performance across different operating conditions.

- Developing suitable testing methods for AI-enabled embedded systems.

- Creating standardized development frameworks that simplify deployment.

- Implementing security measures to protect edge AI hardware.

The Ecosystem: Key Players and Technologies

The TinyML ecosystem includes semiconductor manufacturers, embedded product design services, software framework developers, and end users across many industries who rely on microcontrollers and sensor networks for TinyML applications. Major semiconductor service providers now offer dedicated hardware for TinyML, including power-efficient processors and dedicated AI processors.

Software development has become simpler with frameworks and platforms such as TensorFlow Lite for Microcontrollers and Edge Impulse. These tools bridge conventional machine learning workflows and the hardware limits of embedded systems, so developers without specialized embedded design knowledge can build TinyML applications.

Future Trends: What’s Next for TinyML

TinyML is changing rapidly, and these changes will shape its future direction. Advances in semiconductor technology keep expanding what TinyML can do, even as devices continue to shrink. Several new patterns are emerging that deserve close attention, including the following:

TinyML platforms that combine two or three sensing modalities will let developers build more advanced functionality. A TinyML device that integrates audio, vision, and motion sensing, for example, can capture richer context about its surroundings while keeping power consumption low.

Conclusion

TinyML represents a fundamental shift in how we think about deploying AI systems. By delivering AI directly to ultra-low-power devices, it makes embedded systems smarter and lets them operate without relying on cloud connections.

As an emerging technology, TinyML offers significant opportunities for businesses that specialize in embedded product development and semiconductor services. Those who understand the field will be able to create forward-looking solutions that bridge physical products and digital systems. As embedded product design services strengthen their TinyML capabilities, they will enable sophisticated industry applications that change how people interact with technology in their daily lives.

Discussions of AI development often focus on massive data centers and powerful smartphones, yet its real future lies in tiny devices that make our surroundings smarter and more responsive to human needs. TinyML is opening the door to that future today.